Yet another (but maybe simpler) way of framing the block size debate:

We should first acknowledge that there are indeed benefits associated with a constraining block size limit -- not necessarily net benefits, but certainly "all-else-equal"-type benefits. The most prominent examples of these benefits are the reduced cost of running a full node and the reduced relative importance (and thus, theoretically, the reduced "centralizing" effect) of large miners' "self-propagation advantage" vis-a-vis orphaning risk.

Unfortunately, all else is never equal, and there are also very significant costs associated with a constraining block size limit: higher fees, less predictable fees, slower and/or less predictable confirmation times, and lower on-chain throughput, which forces users onto "off-chain" solutions that are inherently riskier.

Obviously, moving from a block size limit of 0 kB (Bitcoin doesn't exist) to a block size limit of maybe 10 kB represents a situation where the benefits outweigh the costs. Similarly, almost everyone agrees that moving from a block size limit of 10 kB (allowing for perhaps a literal handful of transactions per block) to the current 1-MB limit represents a significant net benefit.

The question is whether or not there's a point at which the marginal costs of increasing the block size limit begin to outweigh the marginal benefits. If so, that transition point is obviously going to be the optimum place to set the limit. Of course, we should note the possibility that the answer to this question is actually no, because at a certain point the benefits of an increased consensus-type limit go to zero. Why? Because the consensus-type limit ceases to have any effect at the point at which it becomes greater than the natural, equilibrium block size limit. These are the conditions under which Bitcoin operated for several years when the 1-MB limit was very large relative to actual transactional demand.
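To make the two cases concrete, here's a rough toy model in Python. Everything in it is an assumption made for illustration: the function names, the diminishing-returns (log) shape of the throughput benefit, the linear node/orphaning cost, and the parameter values are all made up and measure nothing real. It only shows that a transition point can exist when the limit actually binds, and that raising the limit past the natural block size changes nothing.

```python
import math

def net_benefit(limit_mb, natural_mb, value=10.0, node_cost=1.0):
    """Toy net benefit of a block size limit (all parameters are hypothetical)."""
    # Blocks can't exceed the limit, and miners won't go past the natural size.
    effective_mb = min(limit_mb, natural_mb)
    # Assumed shapes: throughput benefit has diminishing returns,
    # while the node / orphaning cost grows linearly with block size.
    return value * math.log1p(effective_mb) - node_cost * effective_mb

def best_limit(natural_mb, candidates, **params):
    """Smallest candidate limit that attains the maximum net benefit."""
    scored = [(net_benefit(limit, natural_mb, **params), limit) for limit in candidates]
    top = max(score for score, _ in scored)
    return min(limit for score, limit in scored if math.isclose(score, top))

# Case 1: demand is huge, so the limit binds and a transition point exists.
print(best_limit(natural_mb=100, candidates=range(1, 33)))

# Case 2: the natural block size is only 2 MB, so any limit above 2 MB
# gives exactly the same outcome and the limit stops mattering.
print(net_benefit(4, natural_mb=2) == net_benefit(32, natural_mb=2))
```

In the second case the "optimum" is not a single point at all: every limit at or above the natural size is equally good, which is the situation Bitcoin was in while the 1-MB limit sat far above actual demand.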

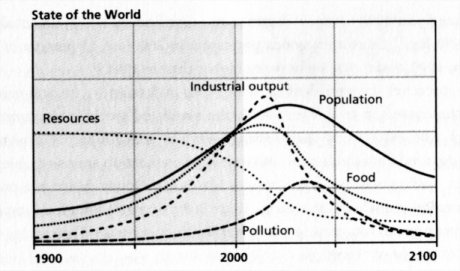

But let's assume for the moment that the answer to that question is yes and thus that a constraining block size limit makes sense. In terms of thinking about where that limit is likely to be, I think it's important to note that the costs of a particular limit should be expected to increase over time as adoption and transactional demand increase. Meanwhile, the benefits of a particular limit should be expected to decrease over time as a result of a) general technological improvements in, e.g., storage / bandwidth and b) Bitcoin-specific advances like Xthin / Compact Blocks. So you've actually got two forces working together to increase where the optimum limit is going to fall over time (again, assuming such a limit is even needed in the first place), which should really drive home the utter insanity of the "1MB4EVA" position.
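A quick sketch of the "two forces" point, again under loudly stated assumptions: suppose the benefit of on-chain capacity is `value * ln(1 + size)` and the node/propagation cost is `cost * size`, so the net benefit peaks at `size = value / cost - 1`. The growth and decline rates below are invented for illustration and are not forecasts; `optimal_limit_mb` is a hypothetical helper, not anything from an actual client.

```python
def optimal_limit_mb(year, value0=2.0, cost0=1.0,
                     demand_growth=0.30, cost_decline=0.20):
    """Toy optimum limit after `year` years (all rates are made-up assumptions)."""
    # Force 1: adoption raises the value of on-chain capacity.
    value = value0 * (1 + demand_growth) ** year
    # Force 2: better hardware and faster relay lower the per-MB burden.
    cost = cost0 * (1 - cost_decline) ** year
    # Peak of value * ln(1 + s) - cost * s is at s = value / cost - 1.
    return max(0.0, value / cost - 1.0)

for year in range(0, 6):
    print(year, round(optimal_limit_mb(year), 2))
```

Setting either rate to zero still pushes the optimum up, just more slowly. With both in play the effects compound, so under these assumptions a limit that was about right in year 0 is far too low a few years later.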