@lunar

>I'm firmly in the nChain camp on this one, and will vote accordingly. I would very much like to see the protocol uncapped and locked down w.r.t. the economics at V0.1, and give it the respect and chance it deserves to fulfil Satoshi's Vision.

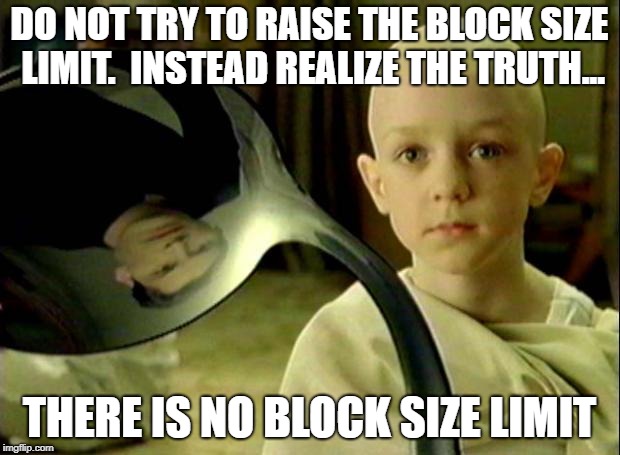

this is my feeling as well, altho i won't say i'm in "nChain's camp". but, in fact, i've decided i'm going to take an entirely different tack by supporting Bitcoin SV, a miner-generated implementation, for now (being in Coingeek's camp). i'm honestly tired of all the bickering btwn the status quo implementation devs who don't have nearly as much skin in the game as these large miners and have a huge penchant for complexity. i believe i've been able to foresee many of these problems with my pessimistic memes of "devs gotta dev" & "the geeks fail to understand that which Satoshi hath created" which go back years to the beginning of this thread. so i'm at least consistent even if you disagree with me. i give high marks to Coingeek who solely mines BCH to the exclusion of BTC, as well as to Ayre who has put up $millions of his own money to take this huge risk. he is a natural born rebel/libertarian who hides offshore and whose philosophical priority on blocksize unlimiting aligns with my highly consistent and years long push to uncap tx throughput onchain and to fulfill Satoshi's original vision to its end (unlimited blocksizes), whether it be by success or failure. this is not to denigrate BU, whose devs i'm sure i would like personally if i ever got around to meeting them, but have consciously resisted, and whom i trust to be honestly pushing what they feel is right for the protocol to the exclusion of proprietary interests. unfortunately, the push for complexity and economic goals i fail to understand, along with the unfortunate 3x node bug crashes from xthins, have forced me to reconsider support. this pains me as i helped conceive BU, heavily promoted it, and contributed monetarily to establishing its original 5-6 worldwide testing nodes. i must say tho that i could change my mind between now and November as things continue to be fluid in this space. good luck to all.

as far as nChain's involvement with SV, i don't see it as a problem for now. the proposed upgrade is limited to old Satoshi op_codes, 128MB blocks, and lifting of a couple of other basic protocol parameters. nothing specifically proprietary from nChain, afaict. sure, i bet CSW will push Ayre to support certain patented tech in the future that could be inserted into the protocol, so we'll have to see. but i'm confident Ayre won't be doing anything highly detrimental to the overarching BCH philosophy just to placate CSW. as it is, it's controversial as to what detrimental effect CSW's patents would even have on BCH if he's specifically allowing free and open use to those wanting to employ them on BCH while at the same time enforcing his rights to keep potentially valuable tech out of the hands of non-BCH implementations.

if you'll notice, i've never once made a comment or taken a position about CSW's personality afflictions or claims. the only mention i've made about him, in a roundabout way, was in regard to the patents and his apparent plagiarism, both of which i'm opposed to in general as concepts. thus, no one can claim i'm a CSW fanboy.